By GNA Editor (John Scheirs) and Alex Gersch, Director, Layfield Australia

The next major focus for geotechnical and geosynthetics engineers will be the increased use of artificial intelligence tools in the geosynthetics construction sector.

From writing project specifications to generating detailed design reports, generative AI is increasingly being used by geotechnical consultants.

Lawyers will need to navigate the complexities these technologies introduce. This includes addressing issues related to data ownership, liability for software errors leading to incorrect design specifications, and the implications of automated decision-making on traditional roles in the construction process.

Information modelling and artificial intelligence are increasingly being used in the design and planning of large construction projects, but they give rise to new and different risks for engineering consultants to consider.

Ensuring clarity and accuracy in design reports will be crucial as these innovative AI tools and practices become more commonplace.

Adapting to this new technology will shape how geotechnical construction projects are executed and will also redefine the legal and contract law landscape.

If AI technology is the next disrupter in the construction industry, who carries the liability for incorrect design calculations and rogue AI responses?

Fake Research Reports

AI-generated fake geotechnical research papers are currently proliferating online, posing a threat to academic search engines and to unsuspecting engineers who cite and rely on these publications.

Academic journals, archives, and repositories are seeing an increasing number of questionable research papers clearly produced using generative AI.

They are often created with widely available, general-purpose AI applications, most likely ChatGPT, and mimic scientific writing.

Some “published” papers contain text copied directly from ChatGPT, including phrases such as “I don’t have access to real-time data” and “as of my last knowledge update”, which are characteristic of responses from OpenAI’s chatbots.
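As a rough illustration of how such chatbot residue might be screened for, the Python sketch below flags the two phrases quoted above in a block of text. The function name `find_telltale_phrases` and the phrase list are our own illustrative assumptions, not an established detection tool. A match is only a prompt for closer scrutiny, not proof of fabrication, and a clean result proves nothing about a paper's authenticity.

```python
import re
from typing import List

# Phrases quoted in this article as tell-tale chatbot residue.
# Illustrative assumption only: this list is not exhaustive.
TELLTALE_PHRASES = [
    "i don't have access to real-time data",
    "as of my last knowledge update",
]


def normalise(text: str) -> str:
    """Lower-case the text, convert curly apostrophes to straight ones
    and collapse runs of whitespace (including line breaks) to one space."""
    text = text.lower().replace("\u2019", "'")
    return re.sub(r"\s+", " ", text)


def find_telltale_phrases(text: str) -> List[str]:
    """Return the tell-tale phrases found in text, in list order."""
    clean = normalise(text)
    return [phrase for phrase in TELLTALE_PHRASES if phrase in clean]


if __name__ == "__main__":
    sample = "As of my last knowledge\nupdate, HDPE geomembranes are..."
    print(find_telltale_phrases(sample))
```

Normalising apostrophes and line breaks matters because text extracted from PDFs often splits phrases across lines or uses typographic quotes.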

Most of these GPT-fabricated papers were found in non-indexed journals and working papers, but some cases included research published in mainstream scientific journals and conference proceedings.

If the spread of fake content goes unchecked and it is incorporated into new designs, it could raise major credibility concerns and tarnish the reputation of design consultants.

Beware the Limitations of AI

Before using any artificial “intelligence” (AI), the user should understand that AI is not intelligent, but rather a next-generation search engine that can rapidly search, compare, collate, and compile multiple pieces of information into a document.

AI should never be used to generate technical specifications, test methods, test reports, technical articles, or research papers unless the author has a thorough understanding of the subject and in-depth technical knowledge.

The author must validate and cross-check every piece of information in the resulting document in detail. Even before the advent of AI, “cut and paste” specifications were already a problem, carrying over incorrect material values, test methods and reporting requirements, for example. AI has now exacerbated this risk.

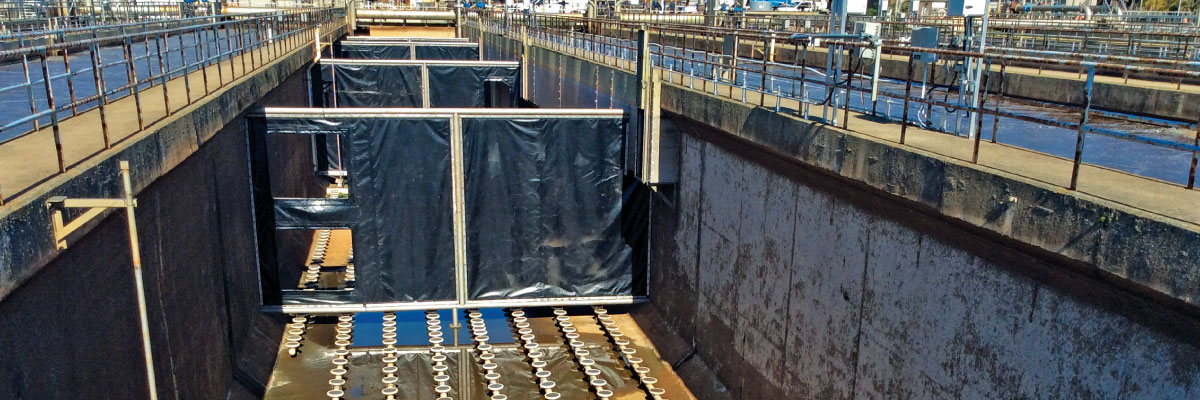

It is conceivable that an AI-generated specification may result in an inappropriate material being used in a critical containment application that subsequently fails catastrophically. In such applications, catastrophic failure can result in loss of life, significant environmental damage, significant financial costs and major reputational damage.

When writing technical specifications, authors should always consult directly with manufacturers, testing authorities, and technical peers to ensure the document is realistic, appropriate and fit for purpose, and achieves the outcomes required by the asset owner.
